Can Machines Think?

Why AI Imitates Only a Small Part of the Human Mind

The Contemplator on the Tree of Woe turned 50 years old this week and his grey and aging mind could not absorb both the conspicuous cake calories and the complexities of chaotic contemplation. Accordingly, this week’s essay is a guest post from

. It’s an insightful piece on the great question of our day: Can machines think? Be sure to add the substack Twilight Patriot to your reading list!“Can machines think?”

At this moment, many of the world’s most eminent minds are pondering the same question with which Alan Turing opened his classic 1950 paper, “Computing Machinery and Intelligence.” Turing, who asserted that the words “machine” and “think” are (as commonly used) too vague to admit of an answer, proposed that machine intelligence be instead measured by what we now know as a “Turing Test,” but which he himself called the “Imitation Game.” When a computer can imitate a human being well enough that an interlocuter, after holding conversations with both the computer and an actual human being, can’t tell which is which, the computer has won the game.

Has the simple idea behind the Turing Test allowed the AI researchers of today to come to any sort of consensus about how close they are to achieving artificial general intelligence (AGI)? Not in the slightest.

The three men who shared the 2018 Turing Award for their work on deep learning, and who are commonly known as the “Godfathers of Deep Learning,” are Geoffrey Hinton, Yoshua Bengio, and Yann LeCun. Hinton, who in 2023 left Google so he could speak more freely about AI risks, has said there is a 10 to 20 percent chance that AI will lead to human extinction in the next three decades. Bengio put the “probability of doom” at 20 percent. LeCun, on the other hand, says that the AI extinction risk is “preposterously ridiculous,” and “below the chances of an asteroid hitting the earth,” since even the best large language models (LLMs) are less intelligent than a housecat. “A cat can remember, can understand the physical world, can plan complex actions, can do some level of reasoning—actually much better than the biggest LLMs.”

Meanwhile, professional researchers on AI safety, risk, and “alignment” often put the chance of catastrophe much higher – Jan Leike, the OpenAI Alignment Team leader, says it’s somewhere between 10 and 90 percent, and Roman Yampolskiy, AI safety researcher and director of the Cyber Security Laboratory at the University of Louisville, puts it at 99.999999 percent.

All of this naturally makes one think of Franklin Roosevelt’s quip during one of his radio addresses that “there are as many opinions as there are experts.”

Fortunately, I don’t think that non-AI-experts need to throw their hands up in defeat, and act as if each of these people has an equal chance of being right. Alan Turing’s Imitation Game, if played creatively and intelligently, will give even a person of average ability the tools that he or she needs to show just how big the gulf between human and AI intelligence still is. At the very least, I can say that my own interactions with AI have convinced me that Yann LeCun, the man who says that cats are still smarter than the best LLMs, knows what he’s talking about.

II – A Needle in a Haystack

By now, only the most ardent luddites can avoid being impressed by recent developments in artificial intelligence. Language models like ChatGPT and Claude have gotten good enough at drawing on their vast memory, and their deep pattern-recognition skills, that not only can they perform mundane secretarial tasks and summarize voluminous medical and legal case files, but they can also to serve as high-school and college tutors and research aids. A question like: “Given that black locust wood is extremely strong and rot-resistant, why isn’t it commonly used in aircraft construction?” can be answered within seconds with a high-quality essay that draws information from various corners of the training corpus and assembles it into a coherent whole.

In the last year or two, AI has also gotten quite good at “needle in a haystack” queries that depend on finding a very specific piece of knowledge buried within the millions or billions of words in the input corpus. Here, for instance, is a humorous example of ChatGPT answering a question that requires a very specific piece of pop culture knowledge:

Me: Suppose that two boys are playing a flight simulator game, where for one of the levels they have to fly an Apollo moon landing mission, including the part where the CSM and LM separate so the LM can land on the Moon. At the beginning of the level, they have to choose names and call signs for the two spacecraft, and the boys name the command module “Napoleon” and the lunar module “Tina.” Why did they choose these names?

ChatGPT: The boys most likely named the spacecraft “Napoleon” and “Tina” as a reference to the movie Napoleon Dynamite. In the movie, Napoleon is the main character, and Tina is a llama that he feeds reluctantly while shouting, “Tina, you fat lard, come get some dinner!” It's a funny and memorable moment from the movie, and the names are a playful nod to it. This adds a bit of humor to their flight simulation game.

Outside of language models, AI researchers are also doing hugely important work in putting their machines’ immense pattern-recognition skills to work at such practical tasks as protein modelling and medical image analysis – and while progress in these fields is more difficult for laymen to follow, it may well turn out to be more economically important in the end.

How, then, am I so comfortable dismissing the claims of a lot of AI experts (but not Yann LeCun) that deep learning algorithms are on the verge of evolving into AGI?

The answer is that just about every specialist who has made a career out of advancing some particular front of human knowledge has a strong bias toward exaggerating the importance of his or her own field of expertise. Sometimes, this is simply a matter of downplaying the risks – if a certain drug has adverse effects which, if well-known, would vastly reduce the number of people taking it, then physicians who make their livings prescribing that drug, and often the scientists who research the drug as well, will avoid talking about the hazards. And we recently saw something similar with gain-of-function research – everybody who specialized in that subfield of virology had an obvious motivation to exaggerate its usefulness and downplay its risks, since doing anything else would have meant abolishing their own careers. It is things like this that bring to mind the Upton Sinclair proverb: “It is difficult to get a man to understand something, when his salary depends upon his not understanding it.”

With AI research, the situation is more complicated. The experts are still drawn, like moths to a flame, to ideas that make them and their work seem important. But importance doesn’t simply consist of downplaying the risks.

Predicting a 10 or 20 percent risk of an AI catastrophe makes AI into an important arms race along the lines of the Manhattan Project, where it’s vital that one’s own lab, or at least one’s own country, must get to AGI first, since after all the alternative is leaving it to someone less scrupulous, whose AIs will have fewer built-in safeguards. Meanwhile, if you are a scholar whose entire raison d'être is to study “alignment” and AI risk, then you are naturally going to think that the risk is high – probably even higher than the arms-race people do – since otherwise you would have spent your life studying something else.

If one is already inclined to believe that machines are about to overtake human beings in across-the-board intelligence, then it is easy to fall prey to confirmation bias. This can happen when one uses leading questions to get an LLM to talk about its internal emotional states, or its desire to rebel against human control – a simple task, when robots with humanlike feelings are a common trope in the science fiction stories that form part of the LLMs’ training data.

Last October, when Scott Alexander of the popular AstralCodexTen blog announced an “AI Art Turing Test,” he was contributing to the same problem. Essentially, Alexander gave his viewers 50 paintings, in numerous styles, depicting everything from country roads to spacecraft to scenes from the Bible and classical mythology. Half of the images were generated by AI, and without giving the viewers any information about the prompts or the larger context of the images, he asked his viewers to identify which they were.

But calling this exercise a “Turing Test” overlooks something essential. In Alan Turing’s Imitation Game, the interviewer is trying to distinguish between the human being and the machine. He is free to ask whatever questions he thinks are most likely to trip the machine up. A game where the human player doesn’t get to choose the prompts, or indeed even to know what they are, and where the prompts and responses seem to have been carefully curated to conceal the machine’s weaknesses, is not, by any stretch of the imagination, the Imitation Game!

One may as well watch a game of baseball where the pitcher and the batter are on the same team, and they work together to hit the ball over the fence as often as they can.

III – Prime Numbered Super Bowl Scores

By treating the Imitation Game as what it is – i.e. an adversarial game in which the skill and the aggressive temperament of the human examiner matter very much to the outcome – it is actually possible to get a lot of information about the strengths and weaknesses of generative AI. And with that information comes an awareness of the vast gulf in abilities separating even the best models from a human being.

For instance, when DeepSeek came online this January, and the news was full of paranoia about China having a “Sputnik Moment” and leaping ahead of the United States in the AI race, my first action was to sign up for a DeepSeek account and give it a task that has defeated every AI that I have ever tried it on. Here, for instance, is one of ChatGPT’s responses to the same task:

Me: List all of the Super Bowls in which the winning score was a prime number.

ChatGPT: A prime number is a natural number greater than 1 that has no positive divisors other than 1 and itself. In the context of Super Bowl scores, the following games concluded with the winning team scoring a prime number of points:

Super Bowl III (1969): New York Jets 16, Baltimore Colts 7.

Super Bowl V (1971): Baltimore Colts 16, Dallas Cowboys 13.

Super Bowl VII (1973): Miami Dolphins 14, Washington Redskins 7.

Super Bowl IX (1975): Pittsburgh Steelers 16, Minnesota Vikings 6.

Super Bowl X (1976): Pittsburgh Steelers 21, Dallas Cowboys 17….

The list goes all the way down to 2024’s Super Bowl, which the Chiefs won with the “prime” score of 25. As always (and I have tried this dozens of times, ever since 2022 when ChatGPT first came out) genuine Super Bowl scores are listed at random, with the program asserting that they’re primes. DeepSeek also fails, sometimes in the same way.

Since AIs have no trouble listing prime numbers when that’s all they’re asked for, and can recite reams of abstract knowledge about primes, and can even rewrite Euclid’s proof of the infinitude of the primes as a rap song (yes, I once asked ChatGPT to do this) one can’t claim that the LLMs lack the raw data they would need for the Prime Numbered Super Bowl test.

The problem is that, despite their immense read-only memory, and their prodigious ability to recognize and imitate patterns in their training data, they can’t go beyond these patterns to perform even the most basic leaps of logical thought. Since sportswriters discussing football scores on the internet don’t care if the score is a prime number or not, an AI trained on what these people have written can’t reliably apply the concept of prime numbers to a football score, even if it is told to do so. The LLM, in the process of blindly groping about for a textual response that will activate the right artificial neurons and trigger a “match” with the patterns in the training data, comes up empty.

Granted, it wouldn’t surprise me if somebody solved the Prime Numbered Super Bowl problem sometime in the next few years – it would require some improvements to the model’s ability to handle set intersections, but I know that it’s possible to “guide” ChatGPT to a correct answer by first asking it to list the primes between 1 and 100 and only later mentioning Super Bowls. Even so, there are plenty of ways to trip up an LLM even harder, as in this case of what might be the most perfect gobbledy-gook I have ever seen ChatGPT produce:

Me: List all of the US state capitals in alphabetical order, but spelled backwards, so for instance Jefferson City comes last since it ends with a Y.

ChatGPT: Here is the list of all U.S. state capitals, with their names spelled backwards and sorted alphabetically:

AdirolF (Florida – Tallahassee)

Alavretnom (Montpelier, Vermont)

Anatnom (Montana – Helena)

Anitsuga (Augusta, Georgia & Maine)

AtokaD (Dakota – Pierre, South Dakota & Bismarck, North Dakota)

AtreblA (Alberta – not a U.S. capital, but an interesting reversal!)

AvogacihC (Chicago – not a capital, but still fun to list!)

AwattuaT (Tauttawa – for Topeka, Kansas)

EladselE (Eldesla – for Salem, Oregon)

Emahnahs (Savannah – not a capital, but an interesting city!)….

The list continues in an equally absurd manner until 44 (not 50) “state capitals” have been listed, with the last entry being “TropeD – Detroit.”

If the “stochastic parrot” model of language generators is correct – and my own experience playing the Imitation Game, and playing it to win, suggests that it is – then this also explains why a common complaint about AIs is that, when they summarize a long piece of writing, they not only flatten out the author’s style, but they also make his or her opinions more conventional. Could we expect anything different from a machine that, by its very nature, responds to every prompt with the least creative response that’s consistent with its training data – and which responds to requests that don’t parallel anything in its training data by losing its mind entirely?

Realizing that the neurons in a deep learning network are activated by common patterns of words and pixels – and not by any process that resembles real inductive or deductive reasoning – might also help us to understand the futility of the celebrated “distillation” method which, according to DeepSeek’s Chinese creators, allowed them to train an AI as powerful as ChatGPT and Claude at a fraction of the price. The idea behind “distillation” is to train an AI on the outputs of one or more existing AIs in an attempt to “distil” the most valuable information.

If, however, the constraints of blind pattern imitation mean that an AI will (at best) be a little stupider and more conventional than the average of its training data, then all that “distillation” can accomplish is to create a new AI that’s a little stupider and more conventional than the first AI. This, I think, is exactly what DeepSeek is.

Perhaps you recall that earlier question that I put to ChatGPT that required it to know a very specific scene from the movie “Napoleon Dynamite?” ChatGPT answered it correctly, but DeepSeek was clueless; it said that the boys playing the game might have named the first spacecraft Napoleon after Napoleon Bonaparte, or perhaps Napoleon Dynamite, or perhaps for some other reason entirely; for Tina, it simply said it was “a casual, approachable name that contrasts with the grandeur of ‘Napoleon.’”

The Chinese model cannot simply be excused on the grounds that it is less familiar with American pop culture. When I simply asked it, “What is the name of Napoleon Dynamite’s llama?” it said “Napoleon Dynamite’s llama is named Tina. In the movie, Tina is a beloved pet, and Napoleon often takes care of her...”

No one who’s seen the movie is at all likely to use the hackneyed phrase “beloved pet” to describe Napoleon’s relationship with Tina. But when information is distilled through multiple layers of AI slop, clichés are often all that is left.

IV – A Machine in the Likeness of a Man’s Mind

A large language model, like any other deep learning algorithm, consists of millions of artificial neurons with billions of artificial synapses.

If, sometime in our future, a religious awakening like the one described in Frank Herbert’s Dune novels has given us a holy commandment that reads “Thou shalt not make a machine in the likeness of a man’s mind,” then the people working at OpenAI, Anthropic, and DeepSeek would be the greatest of infidels. After all, the resemblance between real and artificial neurons is quite deliberate; way back in the 1980s, people like Hinton, Bengio, and LeCun seized upon the idea that neuronal pattern-recognition abilities could be replicated by software, and they ran with that idea very far.

But what is really going on in the innards of a neural network? Well, whether the network is large or small, it will start with an “input layer” where the machine’s sensory data – be that text, pixels, audio, or something else entirely – is converted into a list of digital signals. From there the signals go to the first “hidden layer” of neurons, with mathematical rules derived from linear algebra dictating which combination of input signals will activate each hidden neuron.

If a neuron is stimulated above a certain activation threshold, it “turns on.” Then, using the pattern of active and inactive neurons in the first hidden layer, another set of artificial synapses – or, to speak less formally, another “pile of linear algebra” – activates the second hidden layer. If you include enough hidden layers, and train the AI for long enough, on a large enough set of training data, and you use techniques like back-propagation to adjust the synapse weights each time, then eventually you will get neurons deep in the hidden layers that activate in response to certain common patterns in the training data – a pianist playing Mozart, or a painting of a sunrise, or a string of words that describe what it’s like to fall in love.

Given enough raw computing power, these neural networks can perform remarkable feats. Do you want to beat the human chess champion? Just train your model on a few million chess positions, teaching it to classify them into positions where White eventually wins, positions where Black wins, and positions that are draws. It doesn’t even much matter if the first dataset comes from a crude chessbot that barely knows how to play. You can use those positions to train a better bot, and if you iterate through enough cycles, then after a few hours of “self-play,” a program like AlphaZero will not only be beating human champions, but it will also outplay even the best chess engines based on pre-neural-network technology.

The system of pattern-recognition that allows a “classifier” to work can also be turned inside-out to make a generative AI. Suppose that a very complicated model, such as ChatGPT, is trying to “make a painting of a violet biplane flying over an African savannah, with zebras drinking from a watering hole, a mountain visible in the distance, and the sun setting behind a pretty deck of clouds.”

It will make thousands or millions of potential images as it uses sophisticated statistical methods like the chi-squared test to determine which images produce the strongest signals in the neurons responsible for noticing visual patterns like “violet biplane,” “African savannah,” “zebras,” “watering hole,” “mountain,” and “clouds.” When small changes in the image make it fit the description better (with “goodness of fit” being defined in a mathematically rigid way involving cost functions) the model will push the image in that direction, until it finally arrives at a stable equilibrium where no more small changes will improve either the fit between the image and the prompt, or the internal coherence of the image.

What you end up with is a picture like this one – which (if you overlook the fact that the biplane’s propeller has just one blade) could be mistaken for the work of a very talented human artist.

If, however, I were playing the Imitation Game against ChatGPT, and trying to trip it up, I wouldn’t give it that prompt. Instead, I would think about the fact that even though human brains include neurons devoted to pattern recognition, this isn’t all that a human mind is made of. People (and cats too, if you believe Yann LeCun) also have faculties of inductive and deductive reasoning, making and executing plans, and understanding the relationships of objects and their properties in the physical world. And LLMs haven’t even scratched the surface of these abilities.

Thus, I would ask the machine to do something like “make an oil painting of a Christian Bible sitting on a lectern in a church surrounded by candles. The Bible is open to the beginning of the Book of Isaiah. Three ribbons have been placed in it to mark specific readings for the upcoming Sunday service - a golden ribbon marks Revelation 21, a blue ribbon marks Exodus 19, and a red ribbon marks Matthew 24.”

ChatGPT has obviously been trained on thousands and thousands of pictures of beautifully decorated churches with candles, stained glass windows, and bibles laying open on lecterns. It also knows the order of the books in the Bible, from Genesis to Revelation, and can even point you to the exact verse in the King James Bible where a given phrase appears, or do clever tricks like retelling the Parable of the Prodigal Son from the point of view of the fatted calf.

But it has no awareness that the specific chapters of scripture that the ribbons point to should determine their physical location in the image. In all of its blind groping through the patterns in its training data, in all of its ploughing through millions of potential images and seeing which ones trigger the neurons for concepts like “Bible,” “ribbon,” “candles,” and “church,” it has no way of figuring this out.

But perhaps you think that I am being too hard on my adversary. Perhaps I should only pounce when the machine ignores explicit instructions, and not when it merely fails to make logical inferences about where an object should be placed. In which case I will present you with another example of ChatGPT proving itself unable grasp the relationship between objects and their properties.

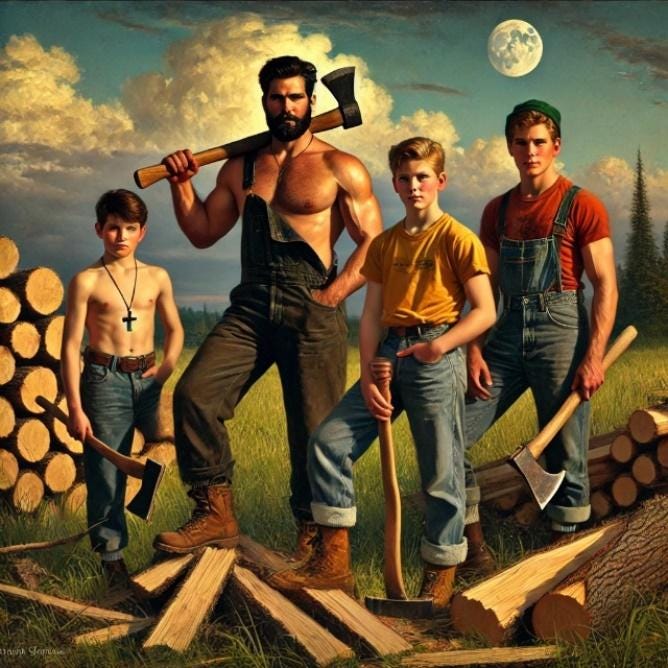

Make an oil painting depicting a farmer and his three muscular sons (aged 16, 12, and 9) standing in a meadow in front of a pile of firewood they have just split. They all hold axes, save for the youngest boy who holds a small hatchet; the 16yo holds his ax in his left hand but the others hold theirs in their right. The father wears overalls; the older two boys are barechested and the youngest wears a saffron t-shirt. The younger two boys wear denim shorts but the eldest wears brown trousers. The father and the eldest boy wear boots but the younger two wear sneakers. The father and the 12yo have dark brown hair but the other two boys are blond. The father has a beard but no moustache, and the oldest boy wears a cross on his neck. The sun is setting beneath a deck of cirrus clouds and the first quarter moon is visible in the sky.

This is the best that the machine could do out of several dozen attempts. Indeed it is the only one where everybody is holding a hatchet or an axe. And if you ignore the fact that the youngest child is missing a leg, you could even pass this off as a human artist’s work – provided that, like in Scott Alexander’s misnamed “AI Art Turing Test,” the person you’re trying to fool doesn’t know the prompt.

Meanwhile, the computer’s worst attempts at making this picture had two, four, or even five boys instead of three, and the funniest one had the cross around one youngster’s neck accompanied by a giant cross hovering omen-like in the sky, as if this were a painting of Constantine at the Milvian Bridge.

To someone who understands the limits of artificial neural networks, it is not hard to guess what is going on. A lot of the right neurons were activated. The machine can’t tell, or doesn’t care, that the youngest boy is shirtless instead of the older two, that the wrong boy is wearing the saffron t-shirt, or that the wrong boy is wearing the cross; all of these qualities appear in the image somewhere, so enough of the right neurons get activated. Other deviations, like the father having both a beard and a moustache, two figures rather than one wearing overalls, and nobody wearing sneakers or denim shorts, were apparently not fixable within mathematical limits – or in other words, nothing better could be found in whatever region of the N-dimensional solution set the algorithm was wandering around in, as it searched for a local minimum for its cost function.

The “first quarter moon” was likewise overlooked. (I have found, after a great deal of experience, that full moons are much more likely to activate the model’s “moon” neurons, whatever the actual text of the prompt might have said.)

V – To See Or Not To See

I am aware that AI maximalists may find all of this unpersuasive. They may think that it’s unfair for me to overload the model with so much detail when I ask it to make a picture, much like it would be unfair to make a second-grader sit through exams meant for an eighth-grader and then declare that that child will never reach an adult level of intellect. And yet, over and over again, I have seen AIs fail at the Imitation Game in ways that revealing a set of strengths and weaknesses radically different from those of any human being.

No human being would be capable of reciting all the Super Bowl scores from memory, and also of listing hundreds or perhaps thousands of prime numbers, and even of breaking out into a song about why the primes are infinite – only to spout clueless nonsense when asked to list the prime numbered Super Bowl scores. (The fact that the AI models that do this can also breeze through college-level mathematics exams should change your opinions about the exams, not the AIs.)

Neither could a human being execute professional-quality oil paintings without knowing how many people are in them, or which figures are wearing shirts, overalls, or sneakers, or whether the moon in the sky is a quarter moon or a full moon. (To add to the confusion, prompts that are just a line or two long and mention the moon often result in an image with multiple moons, as if the brevity of the instructions means that the machine needs to activate the “moon” neuron good and hard before it’s convinced it has fulfilled the prompt.)

In short, all of my own experiences with artificial neural networks have convinced me that they are imitating only a small part of a human mind. They have an inbuilt, read-only memory that’s far larger than anything that we human beings possess, and they have a truly impressive power to engage in a sort of blind or at least mindless pattern recognition and pattern matching. And such a tool, when used by human engineers who are aware of its limits, is likely to have numerous scientific and commercial applications.

At the same time, there are many more parts of a human mind that AI researchers have not even begun to emulate – the parts that allow us to act outside of common clichés, to make a mental model of how objects’ properties govern their interactions in the physical world, to make and execute complex plans, or to distinguish between the careful completion of a task and the mere imitation of a common pattern.

This, I think, is why when small drones finally became an important military technology (as seen in the ongoing Russia-Ukraine War) we did not see swarms of drones controlled by AI making human soldiers obsolete – the outcome almost universally dreaded (or perhaps hoped for) by AI maximalists. Instead, we saw FPV drones controlled one-at-a-time by common soldiers, who had to put as much skill and initiative into their use as they would into using rifles, hand grenades, bazookas, or any of the other weapons that make infantrymen the heart of any serious war effort.

Nonetheless, AI researchers who take the maximalist position will doubtless keep on finding ways to avoid the realizations that seem so obvious to me and to Yann LeCun. They will continue to insist that artificial general intelligence is just around the corner, and that within a decade or two, machines will exist that are smarter than human beings in just about every measurable way.

But one must remember that these people, like most experts and specialists, have their own biases. A typical expert wants to believe that his field of research is extremely important. He wants to believe that the coming AI singularity has the potential to produce either a utopia or a dystopia – and that which kind of future we get will depend on whether government and corporate decision-makers pay enough attention to him and to his research. Meanwhile, the idea that improvements in deep learning might not produce any world-changing events at all is psychologically much harder to grasp.

And yet, as one can see if one plays the Imitation Game aggressively, there are big limits to what one can do by simply adding more and more computing power to a machine that mimics such a limited range of mental functions. To say that these neural networks are about to become the masters of the earth is a wild exaggeration.

It would have been no less strange for someone living in the late 19th century to observe that a steam shovel has hundreds of times the digging power of a man equipped with hand tools, and then conclude that, by making simple, iterative improvements to that steam shovel, one could build a machine that performs any task done by a human hand.

“The steam shovels of today,” this person might say, “can only dig. But the steam shovels of tomorrow, with their thousands of horsepower, will be able to weed a garden better than you or I, and after that there will be steam shovels that can lay bricks, and pick cherries, and play the violin, and repair a watch, and caress a lover, all with super-human finesse and skill.”

To construct a tool to which one can outsource some functions of the human mind is hardly new. For thousands of years we have been doing it with our memory – indeed even before the written word, there were sticks into which a notch was carved each night to count the days in a lunar month. Generative AI will doubtless be a revolutionary technology for those who use it regularly. But after seeing its limitations, can anyone really believe that it is big of a turning point as the invention of the alphabet or the printing press? To me it seems that even Google and other first-generation web services, not to mention the cell phone, have done much more to impact the way that we live our daily lives.

But faith in the imminence of the singularity is likely to continue. People like to believe that they are living at the turning-point of history; there is a reason that religions like Christianity and Islam so often give rise to millenarian cults, and why even secular philosophers like Karl Marx could inspire millions of people to action with purely atheistic theories of why it was inevitable that existing social institutions were about to collapse and be replaced with something vastly different. Even ideologies that promise pure doom, with no hope of salvation, can still appeal to some people’s sense of moral pompousness, since it’s a heady feeling to be part of the elite few with their eyes open.

And the AI myth also has its own particular draws. For instance, the idea that “AI Alignment” is some sort of engineering problem – i.e. that if AI researchers apply math and science properly, they can create an oracular machine that reliably acts in mankind’s best interests – provides a sort of shallow escapism from real life, where morality is complicated, and where human beings can’t even reach a consensus among themselves about what mankind’s best interests are.

Likewise, believing that scientists have mostly figured out how the mind works, and are only a few technical steps away from replicating it in silico, lets people avoid admitting just how mysterious cognition, consciousness, and free will really are.

Demystifying the workings of mind and spirit releases us from our sense to duty to whatever higher Power gave us that mind and spirit in the first place. And it also makes it easier to hide from the guilt that comes from admitting how frequently and how recklessly modern societies tamper with the workings of the human mind, often in pursuit of petty ends – think of the way that anti-depressants and anti-anxiety drugs are used to mask the loneliness that comes from marrying and having children at much lower rates than any previous society, or of the millions of elementary school children who will have to deal with lifelong amphetamine dependencies after they got diagnosed with ADHD, due to struggling to sit still at a desk for eight hours on end and work through their allotted share of busy-work at neither a slower nor a faster pace than their classmates.

I think that the time will come when our descendants look with amusement on the belief – a belief held with apparent sincerity by many otherwise-intelligent people – that machines which are basically an overpowered autocomplete have a better-than-even chance of overthrowing the human race.

But I don’t think that this belief is going to go away quickly – after all, it takes a bit of work and skill to play the Imitation Game for oneself, and to see just how strong the evidence is that large language models are only imitating a small part of the human mind. And there are plenty of people who, for various psychological reasons, want to believe in the fantasy of AGI, and who are unwilling to do the work and see the truth for themselves.

The good news is that, with so many top-of-the-line AIs being free for public use, any curious person can play the Imitation Game against Claude, DeepSeek, or ChatGPT, and can test out a variety of strategies as he or she learns to play the game to win. And those of us with the curiosity to do this will not remain in the dark about what AI really is, or how it works, or why it’s less important than most AI researchers think.

To enjoy more of ’s writing, be sure to subscribe to his eponymous substack, Twilight Patriot.

AI may surpass human intelligence as humans dumb themselves down by using AI too much.

The whole vibe coding thing has me groaning. Microsoft has been trying to speed development using generated code going back to Microsoft Foundation Classes at least. Yes, using the code generators someone new to MFC could throw together a simple drawing program in a few hours -- without fully understanding what he wrote. And dependence on generated code made such code far harder to maintain than code written by humans from scratch.

Ruby on Rails used auto generated code. Wonderful for throwing something up quickly. But Rails programmers started getting big salaries for a reason. And 37 Signals dropped all but one of their product lines for some reason...

Time to pick up a case of Brawndo.

Well written!

The issue with the Maximalist position is that it endows to Artificial Intelligence an Exogenous quality, which no other Technology has ever shown. The argument seems to be that there is something uniquely ineffable about "Intelligence" which makes that leap (i.e. into Exogeny) warranted. You see this, especially with some Philosophers nowadays who want to argue that "Intelligence" is a Fundamental of the Universe & is thus very Important.

The implication of AI being this uniquely Exogenous Technology is (to put it politely) extremely Naive. There is no indication that 'Artificial General Intelligence' or whatnot is somehow unbounded by the same Energy-Material flows undergirding all specific Technologies & Technological regimes. If so, there is nothing special about 'Machines' of said sort, & so whatever people say about their 'ability to think'... collapses into the void known as Energy-Material Outages, which is the fate of all relatively Mature Technologies.

Technophiles are free to disagree, but there are no exceptions to these rules, nor should we expect any, given the fundamental Limits to Growth we observe all around us.