Well, That Happened Fast

Sequoia Capital and Nature Declare AGI Is Here, and Maybe It Is

Five weeks ago, in my article Predictions and Prophecies for 2026, I wrote:

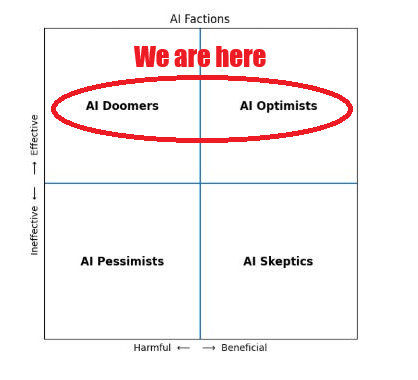

My prediction is that over the course of 2026 we will see a convergence around AI’s effectiveness on the y axis and a divergence of opinion on the x axis, such that people will be increasingly split into optimist factions and doomer factions. Skepticism about the power of the technology will give way to skepticism about the benefit and/or sustainability of the technology.

If you didn’t read Predictions and Prophecies for 2026, you should do so now. The convergence of opinion is happening a lot faster than I had expected, and that means the follow-on effects I outlined in that article will arrive fast, too.

The idea that #ItsHappening doesn’t sit well with a lot of people, and I know there’s going to be pushback on this. Therefore, I’m going to break this article into two parts. The first part asks “Are opinions actually converging?” and the second part asks “Are those opinions actually correct?”

Are Opinions Actually Converging on AI’s Effectiveness?

When AI pundits discuss the effectiveness of AI, it often involves asking whether AI has achieved general intelligence and become AGI (Artificial General Intelligence). AGI is seen as the stepping stone towards ASI (Artificial Super Intelligence).

Not even three weeks after I published Predictions and Prophecies for 2026, Sequoia Capital released a white paper called “2026: This is AGI” with the provocative header “Saddle up: Your dreams for 2030 just became possible for 2026.”

The authors, Pat Grady and Sonya Huang, write:

While the definition is elusive, the reality is not. AGI is here, now… Long-horizon agents are functionally AGI, and 2026 will be their year…

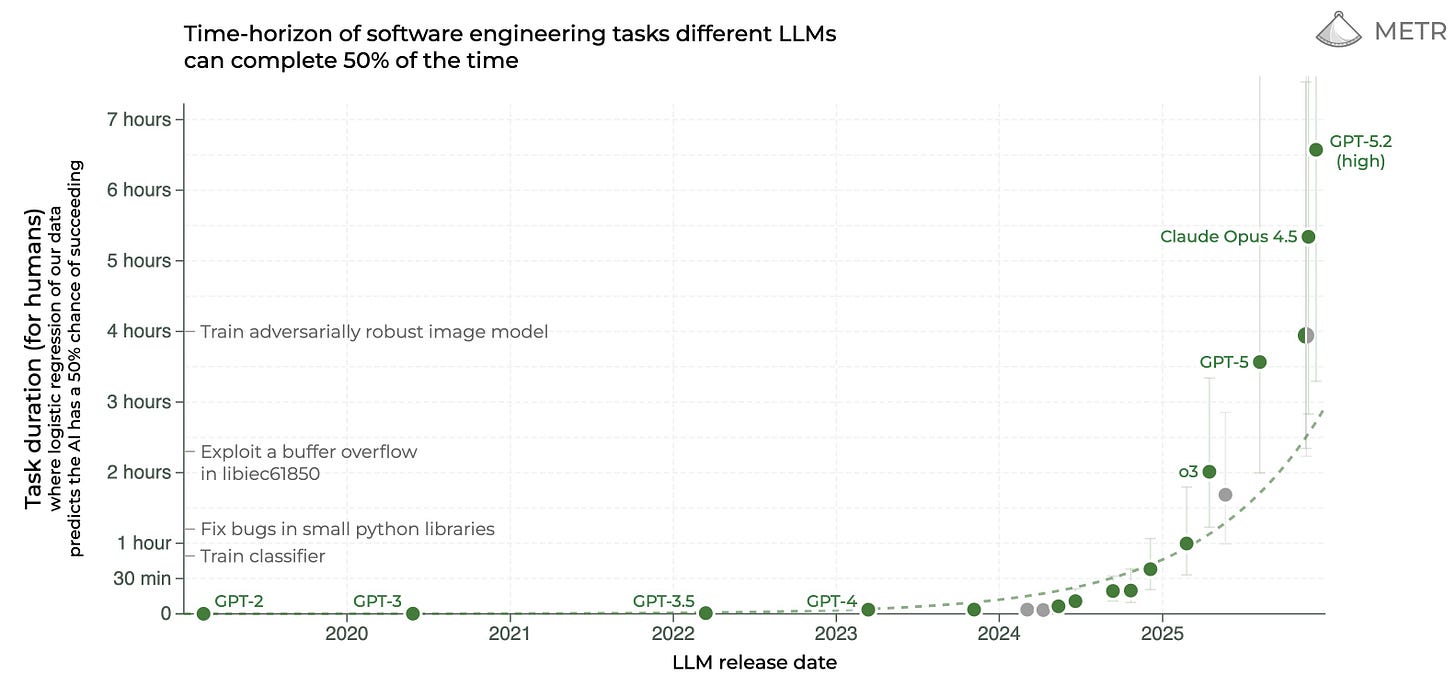

If there’s one exponential curve to bet on, it’s the performance of long-horizon agents. METR has been meticulously tracking AI’s ability to complete long-horizon tasks. The rate of progress is exponential, doubling every ~7 months. If we trace out the exponential, agents should be able to work reliably to complete tasks that take human experts a full day by 2028, a full year by 2034, and a full century by 2037…

It’s time to ride the long-horizon agent exponential… The ambitious version of your roadmap just became the realistic one.

So Sequoia Capital’s opinion is that long-horizon agents have arrived and are functionally AGI.
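The extrapolation in that quote is just repeated doubling, so the arithmetic is easy to check. Here is a minimal Python sketch, assuming a clean exponential with the fixed ~7-month doubling time Sequoia cites; `months_to_reach` is a hypothetical helper for illustration, not anything published by METR or Sequoia:

```python
import math

def months_to_reach(start_length: float, target_length: float,
                    doubling_months: float = 7.0) -> float:
    """Months for a task-length horizon to grow from start_length to
    target_length, assuming pure exponential growth with a fixed doubling time.
    Both lengths must be in the same unit (hours, days, years...)."""
    doublings = math.log2(target_length / start_length)
    return doublings * doubling_months

# A century is 100 years, so going from a one-year horizon to a one-century
# horizon takes log2(100), about 6.64 doublings.
print(round(months_to_reach(1, 100), 1))  # 46.5
```

The same function works for any pair of horizons, but its answers are only as good as the assumption that the doubling time holds steady.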

The obvious retort to this is “who cares what Sequoia Capital thinks?” But that’s a bad retort when we’re discussing convergence of opinion. Sequoia Capital are the primary architects of the modern tech landscape. Since 1972, they have consistently identified and funded the “defining” companies of every era, from Apple and Atari in the 1970s to Google, NVIDIA, WhatsApp, and Stripe in the decades that followed. To put their influence into perspective, companies they backed currently account for more than 20% of the total value of the NASDAQ. When Sequoia publishes an investment thesis, the entire venture capital industry pivots, because their track record of predicting where the world is going is virtually unmatched. Dismissing their opinion is like ignoring the map drawn by the people who spent 50 years surveying the terrain. If there’s one venture capital firm in the world that represents The Cathedral of Opinion, it’s them.

Just over two weeks after Sequoia’s white paper, Nature published a Comment entitled “Does AI already have human-level intelligence? The evidence is clear.”

The Nature authors write:

By reasonable standards, including Turing’s own, we have artificial systems that are generally intelligent. The long-standing problem of creating AGI has been solved…

We assume, as we think Turing would have done, that humans have general intelligence… A common informal definition of general intelligence, and the starting point of our discussions, is a system that can do almost all cognitive tasks that a human can do… Our conclusion: insofar as individual humans have general intelligence, current LLMs do, too.

The authors go on to provide what they call a “cascade of evidence” for their position. (Read the article). They also rebut the common counter-arguments. I want to give particular attention to their critique of the notion that LLMs are just parrots:

They’re just parrots. The stochastic parrot objection says that LLMs merely interpolate training data. They can only recombine patterns they’ve encountered, so they must fail on genuinely new problems, or ‘out-of-distribution generalization’. This echoes ‘Lady Lovelace’s Objection’, inspired by Ada Lovelace’s 1843 remark and formulated by Turing as the claim that machines can “never do anything really new” [1]. Early LLMs certainly made mistakes on problems requiring reasoning and generalization beyond surface patterns in training data. But current LLMs can solve new, unpublished maths problems, perform near-optimal in-context statistical inference on scientific data [11] and exhibit cross-domain transfer, in that training on code improves general reasoning across non-coding domains [12]. If critics demand revolutionary discoveries such as Einstein’s relativity, they are setting the bar too high, because very few humans make such discoveries either. Furthermore, there is no guarantee that human intelligence is not itself a sophisticated version of a stochastic parrot. All intelligence, human or artificial, must extract structure from correlational data; the question is how deep the extraction goes.

The latter argument is essentially the same point I made in my essay “What if AI isn’t Conscious and We Aren’t Either?” Contemporary neuroscience and physicalist philosophy have aligned around a neurocomputational theory of mind that describes both human and machine intelligence in similar terms. Scientists working within that paradigm cannot easily deny general intelligence to machines without denying it to us as well. The logic of their own position dictates that if we have general intelligence, so do LLMs, and if LLMs don’t, then we don’t either.

Again, the obvious retort to this is “well, who cares what Nature says?” But that’s a bad retort here, too, when we’re discussing convergence of opinion. For over 150 years, Nature has been the ultimate gatekeeper of scientific legitimacy. Its articles signal to the global elite which technologies are ready to transition from experimental code to world-altering infrastructure. When Nature says AGI is here, that shapes government regulation, international ethics standards, and massive institutional funding in ways a technical paper in a specialist journal never could. Nature is the venue where concepts are either codified into the scientific consensus or relegated to the fringe. And right now, Nature is codifying AGI into the scientific consensus.

So the world’s most important venture capital firm and the world’s most prestigious scientific journal are both saying the same thing: AGI is here, right now.

Are These Opinions Actually Correct?

Ah… but are they right? Has AI become AGI, or is this just hype? One of the disturbing dilemmas of the present day is the ability of our elites to establish and maintain strongly held opinions that simply… do not represent reality. “Children just aren’t going to know what snow is!” “Globalization is inevitable!” And so on.

At this point I would like to reassure you that AI is just tulips, it’s just pets.com, it’s just hype, there’s no there there, your jobs are safe, and nothing is really happening. Unfortunately, I cannot do that, because to me it seems like something is happening.

On January 30th, videogame stocks plummeted. Take-Two Interactive (TTWO.O) fell 10%, Roblox (RBLX.N) fell 12%, and Unity Software (U.N) dropped 21%. Why? Because Google rolled out Project Genie, an AI model capable of creating interactive digital worlds.

The Reuters article notes:

“Unlike explorable experiences in static 3D snapshots, Genie 3 generates the path ahead in real time as you move and interact with the world. It simulates physics and interactions for dynamic worlds,” Google said in a blog post on Thursday.

Traditionally, most videogames are built inside a game engine such as Epic Games’ “Unreal Engine” or the “Unity Engine”, which handles complex processes like in-game gravity, lighting, sound, and object or character physics.

“We’ll see a real transformation in development and output once AI-based design starts creating experiences that are uniquely its own, rather than just accelerating traditional workflows,” said Joost van Dreunen, games professor at NYU’s Stern School of Business.

Project Genie also has the potential to shorten lengthy development cycles and reduce costs, as some premium titles take around five to seven years and hundreds of millions of dollars to create.

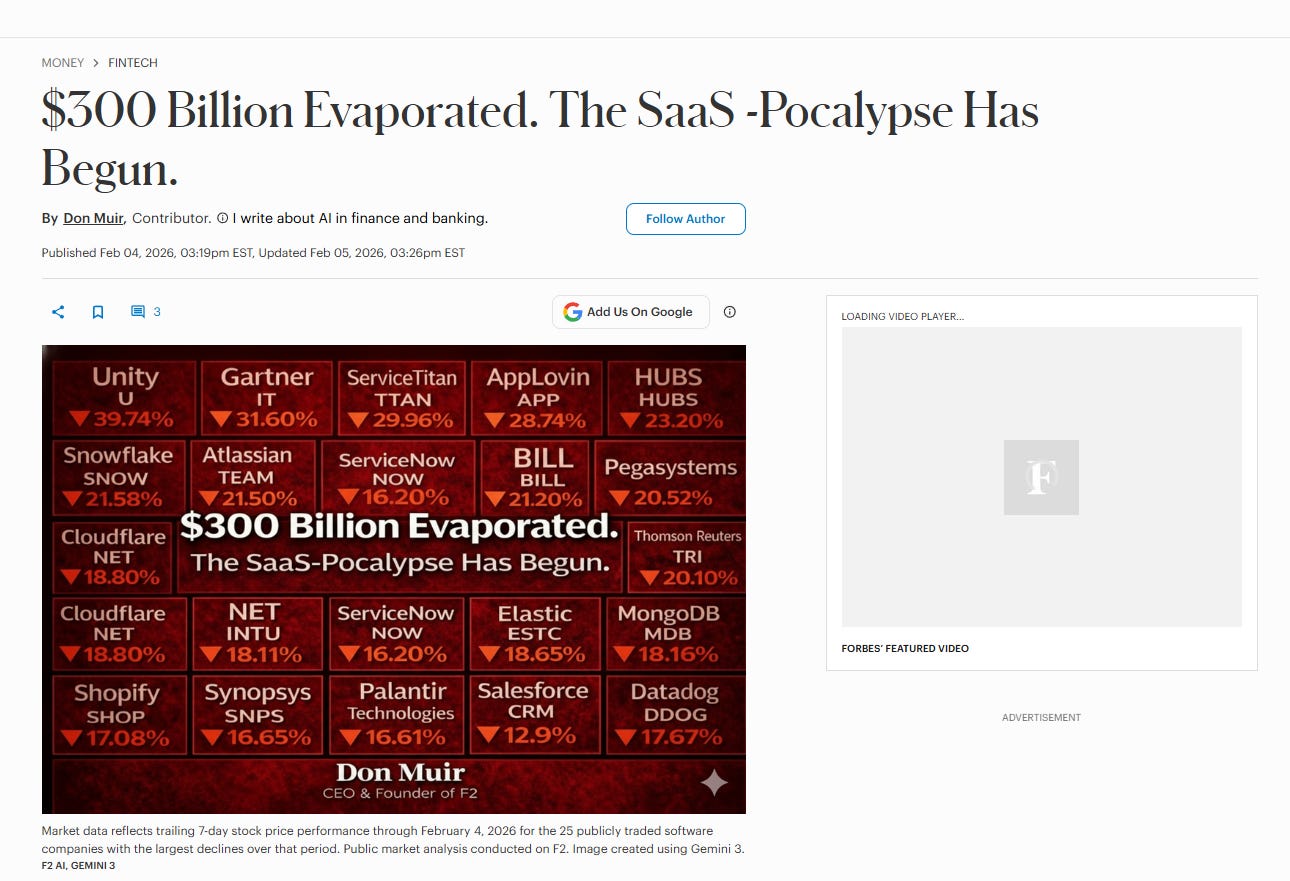

Then, just two days ago, on February 4th, SaaS stocks crashed. Forbes declared “The SaaS-Pocalypse Has Begun.” $300 billion evaporated from the stock market. Why? The crash was triggered by Anthropic’s release of 11 open-source Claude plugins for legal and compliance workflows. These agents automate billable-hour tasks, breaking the “seat-based” pricing model that powered the SaaS giants. Thomson Reuters dropped 18%, LegalZoom dropped 20%, and the S&P Software Index fell 15%, its worst drop since 2008.

The Forbes article explains:

For most of the past two decades, enterprise software benefited from a remarkably stable economic story. Software was expensive to build. Switching costs were high. Data lived in proprietary systems.

Once a platform became the system of record, it stayed there. That belief underpinned everything from public market multiples to private equity buyouts to private credit underwriting. Recurring revenue was treated as a proxy for predictability. Contracts were assumed to be sticky. Cash flows were assumed to be resilient.

What spooked investors last week was not that AI can generate better features. Software companies have survived feature competition for years. What changed is that modern AI systems can replace large portions of human workflow outright. Research, analysis, drafting, reconciliation, and coordination no longer need to live inside a single application. They can be executed autonomously across systems.

Both Reuters and Forbes are restating the arguments that Sequoia and Nature made above: AI platforms are now capable of sustained, autonomous, long-horizon work, and that development is going to affect everything.

Why does this matter? Because when people predict a major market crash from AI, they are generally asserting that AI is a bubble that’s going to burst. They are arguing that AI will be proven fake and that AI valuations will crash. But that’s not what’s happening here at all. What’s happening is that all the other stocks are crashing. The market is signaling that AI is so real that it’s deconstructing the rest of the economy.

Admittedly, the stock market is just a social construct, and as such it cannot be used as evidence for reality. The fact that AI releases are causing other sectors to crash could just be evidence of the persuasive power of Nature-Sequoia type elite opinion. This could just be Exxon crashing after Greta Thunberg warns against the dangers of CO2 emissions at the UN. But it could be evidence that there’s something real happening in consumer-producer behavior. This could be Borders Books crashing because people really have switched to buying books on Amazon.

Which is it? I think it’s more Borders than Exxon. Anthropic didn’t just issue a press release; it actually shipped Claude plugins that do what junior associates do: review contracts, flag compliance issues, draft memoranda. Project Genie didn’t just promise to eventually generate interactive worlds; it generated them, on camera, in real time. Stocks aren’t crashing on projections of future disruption; they’re discounting disruption that has already happened.

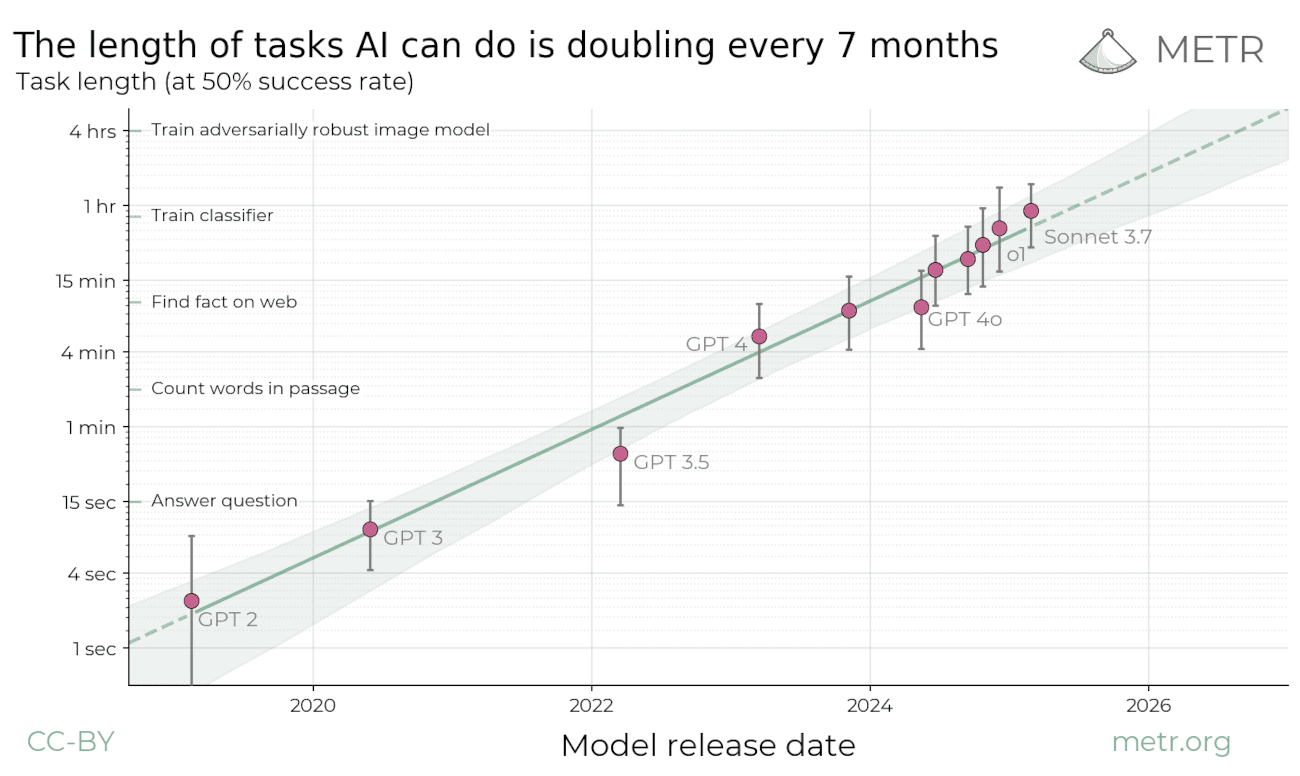

And there are more disruptions happening still. On February 4th 2026, METR (Model Evaluation & Threat Research) released its latest study of the time horizon for software engineering tasks that LLMs can complete with 50% success. This chart has been called “the most important chart in AI.” It’s the one Sequoia Capital referenced, which I quoted above and will quote again:

If there’s one exponential curve to bet on, it’s the performance of long-horizon agents. METR has been meticulously tracking AI’s ability to complete long-horizon tasks. The rate of progress is exponential, doubling every ~7 months. If we trace out the exponential, agents should be able to work reliably to complete tasks that take human experts a full day by 2028, a full year by 2034, and a full century by 2037…
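What does “time horizon at 50% success” actually mean? As far as I understand METR’s published method, it fits a logistic curve of a model’s success against the logarithm of how long each task takes a human expert, then reads off the task length where that curve crosses 50%. Here is a minimal Python sketch of that idea. The task lengths and pass/fail results are invented for illustration, and plain gradient descent stands in for whatever fitting routine METR actually uses:

```python
import math

def fit_logistic(xs, ys, lr=0.5, steps=5000):
    """Fit p(success) = sigmoid(a + b * (x - mean)) by plain gradient descent
    on the average log-loss. Returns (a, b, mean)."""
    n = len(xs)
    mean = sum(xs) / n
    centered = [x - mean for x in xs]  # centering speeds up convergence
    a = b = 0.0
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for x, y in zip(centered, ys):
            p = 1.0 / (1.0 + math.exp(-(a + b * x)))
            grad_a += p - y
            grad_b += (p - y) * x
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b, mean

def horizon_50(task_minutes, successes):
    """Task length (minutes) at which the fitted success rate is 50%."""
    # Success is modeled against log2 of the human task length.
    xs = [math.log2(t) for t in task_minutes]
    a, b, mean = fit_logistic(xs, successes)
    # sigmoid(z) = 0.5 exactly when z = 0, i.e. when x = mean - a / b
    return 2 ** (mean - a / b)

# Hypothetical results: how long each task takes a human expert (minutes),
# and whether the model completed it (1) or not (0).
minutes = [1, 2, 4, 8, 16, 32, 64, 128]
solved = [1, 1, 1, 1, 0, 1, 0, 0]
print(f"50% time horizon: about {horizon_50(minutes, solved):.0f} minutes")
```

Working in log task length is what makes a steady doubling of the horizon show up as a straight line on METR’s log-scale chart.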

This is what METR’s “Task Length (50% success rate)” chart looked like in March 2025, when it predicted that the length of tasks would double every 7 months:

And this is what the chart looks like as of today:

In other words:

Tick tock. Tick tock.

Within 24 hours of METR’s Task Length chart going vertical, the chart became obsolete. METR was analyzing the last generation of models. That hockey stick you’re seeing is based on Claude Opus 4.5 and GPT-5.2.
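Whether a curve is “going vertical” comes down to whether its doubling time is shrinking. A minimal Python sketch, assuming growth is exponential between any two readings; `doubling_time_months` is a hypothetical helper, and the readings in the example are made up rather than taken from METR’s chart:

```python
import math

def doubling_time_months(h1: float, h2: float, months_apart: float) -> float:
    """Doubling time implied by two time-horizon readings (same units)
    taken months_apart, assuming exponential growth between them."""
    if h1 <= 0 or h2 <= h1:
        raise ValueError("need 0 < h1 < h2 for a positive doubling time")
    return months_apart * math.log(2) / math.log(h2 / h1)

# Hypothetical: a horizon that grows from 60 to 240 minutes in 12 months
# has doubled twice, so it doubles every 6 months.
print(round(doubling_time_months(60, 240, 12), 2))  # 6.0
```

If later pairs of readings return a smaller number than earlier pairs, the doubling time itself is shrinking, which is what an upward bend on a log-scale chart means.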

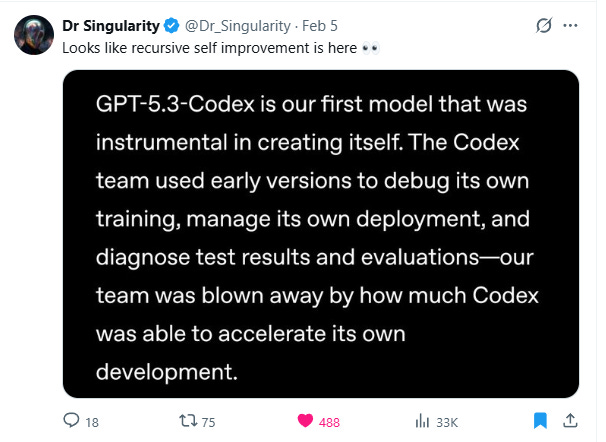

Yesterday, February 5th 2026, Anthropic released Claude Opus 4.6 and OpenAI released GPT-5.3-Codex. Opus 4.6 improves on 4.5 with better planning, reliability in large codebases, code review, debugging, and sustained long-horizon tasks. It introduces a 1M token context window in beta and features “agent teams” in research preview, allowing coordinated multi-agent collaboration on complex projects. GPT-5.3-Codex is an upgraded coding model that combines enhanced coding performance from GPT-5.2-Codex with improved reasoning and professional knowledge from GPT-5.2.

According to OpenAI, GPT-5.3-Codex was “instrumental in creating itself.” That would make it the first recursively self-improving model. Pause on that for a moment. This is not a marketing claim about AI-assisted coding in general. OpenAI is asserting that the model materially contributed to its own engineering. If true, this is the first confirmed instance of recursive self-improvement.

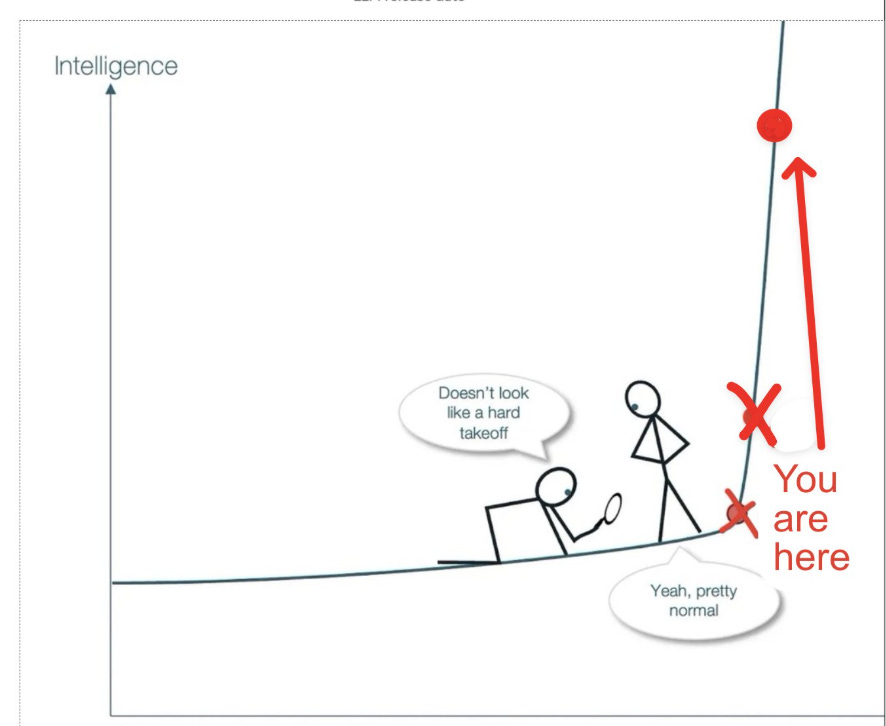

AI theorists have long identified recursive self-improvement as the inflection point between linear progress and exponential takeoff. Every prior model on METR’s chart was built by human engineers, with AI serving as a tool. GPT-5.3-Codex appears to be the first model that served as a collaborator in its own creation. The distinction matters a lot. Tools improve at the rate their users improve. Collaborators improve at the rate they themselves improve. That is a fundamentally different dynamic, and it’s the one the “fast takeoff” literature has been warning about for two decades.
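The tool-versus-collaborator distinction is the difference between linear and exponential growth. A deliberately crude toy model in Python makes the point. The `rate` value and step count are arbitrary assumptions, and nothing here is calibrated to GPT-5.3-Codex, METR’s data, or any real system; it is a toy, not a forecast:

```python
def simulate(steps: int, rate: float = 0.1, self_improving: bool = False) -> list[float]:
    """Toy capability model. A tool gains a fixed amount each step (set by its
    human users); a collaborator's gain is proportional to its own capability."""
    capability = 1.0
    history = [capability]
    for _ in range(steps):
        if self_improving:
            capability += rate * capability  # growth feeds on itself: exponential
        else:
            capability += rate  # fixed external improvement: linear
        history.append(capability)
    return history

tool = simulate(50)
collaborator = simulate(50, self_improving=True)
print(f"after 50 steps: tool {tool[-1]:.1f}, collaborator {collaborator[-1]:.1f}")
```

With identical starting points and the same per-step rate, the only difference is whether the gain depends on the system’s own capability, and that alone separates a straight line from a compounding curve.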

Plan accordingly. Plan accordingly even if you disagree. You might not have agreed that COVID-19 was a deadly epidemic, but you still got locked down and told to wear a mask and get jabbed. You might not have agreed that climate change was real, but Europe still deindustrialized because of it. Elite consensus reshapes the world whether it reflects reality or not. And the elites are planning on AI.

As for me, I’m not taking much comfort in the foolishness of our elites. Unlike their climate predictions, which operated on century-long timescales conveniently beyond falsification, their AI predictions are being tested in real time, and they keep coming true. The more pressing question in my mind is which of the AI predictions will come true… the very good ones or the very bad.

Talk of LLMs, AGI, & “recursive self-improvement” consistently ignores first principles that have constrained every complex system.

Everything (emphasis on “thing” 😉) reduces to four binding constraints… namely energy, materials, demography, and ecology… none of which can be hand-waved away by software progress or clever abstractions… for these things ultimately exist only via the interplay of those four fundamentals… & so unless one of those constraints is breached in a thermodynamically unprecedented way, their interaction inevitably generates negative feedback loops that dominate outcomes:

The intelligence-explosion narrative rests on a basic category error… whereby it treats recursive self-improvement as if it can operate independently of the physical world, which itself supervenes on said fundamentals aforesaid:

& this is why sustained exponential growth doesn’t exist outside simplified models; in reality, exponentials are transient phases that resolve into logistic curves once constraints assert themselves, & we see the negative hits crush all growth, yielding hysteresis…

For this specific talk of “recursive self improvement” in particular… Compute requires energy, & said energy requires infrastructure, with said infrastructure then requiring materials & labor…

All of that then depends on demographic capacity & political stability. 😘 (Hint— These are already dead 🥰):

Materials are finite, demographics are aging or shrinking, ecological limits are non-negotiable, & each layer constrains the next through hard feedbacks….

& hence, every supposed “runaway” dynamic activates countervailing pressures that slow growth, raise costs, increase fragility, & impose ceilings in an inevitable, DOOM-ed & Destined manner 😍

The relevant question isn’t whether intelligence can improve itself in theory, but whether intelligence can outrun the systems that physically instantiate it to begin with… systems without which nothing else can even prevail anyhow:

Thermodynamics, History & Theology… answer that question clearly & repeatedly, time & time again:

All Growth eventually saturates, negative feedbacks dominate… & limits reassert themselves…. 😊

“Recursive self-improvement” is thus not a law of nature; constraints are… & they will simply make themselves felt this century as the 🌍 moves decisively into Negative Sum conditions & Perpetual Conflict, coupled with Low Energy Dominance.

Tl;dr— Pater OPTIMIST confirmed! 😘 🥰😍🤭

One doubts. In fact, one disbelieves. I'll have to read the papers.

E.g., these reasons. https://wmbriggs.substack.com/p/the-limitations-of-ai-general-or